Streaming Media Framework ZLM4J Releases Version 1.0.8

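🔥🔥🔥 ZLM4J is a streaming media framework for the Java ecosystem. It covers the live-streaming, video-surveillance, and real-time audio/video protocol stacks, which makes it a solid base for building your own streaming media applications.

🌟 Version 1.0.8 is released (already published to Maven Central, so there is no need to build it yourself).

- Source repository: https://gitee.com/aizuda/zlm4j

- Documentation: https://ux5phie02ut.feishu.cn/wiki/NA2ywJRY2ivALSkPfUycZFM4nUB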

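```xml
<dependency>
    <groupId>com.aizuda</groupId>
    <artifactId>zlm4j</artifactId>
    <version>1.0.8</version>
</dependency>
```

Version 1.0.8 changelog:

- Developed against the master branch as pulled on 2024-05-29

- Published the jar to Maven Central

- Added the `mk_proxy_player_create3` and `mk_proxy_player_create4` functions, which configure the retry count of the pull-stream proxy

- Deprecated `mk_env_init1`

- For more entries, see the version update log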

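Hands-on: connecting the Hikvision SDK to ZLM4J for ultra-low-latency live preview

1. Prerequisites and tools

The Hikvision SDK and a working knowledge of how to integrate it, a Hikvision camera or Hikvision NVR, ZLM4J, and the VLC player or the flv.js player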

2. ZLM4J features used

- Creating and pushing streams

- Audio encoding

- Pull-stream playback

- On-demand protocol conversion
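The audio path in this walkthrough feeds PCM into ZLM4J, and the demo code later in this article converts the camera audio with `G711ACodec._toPCM` first. To give a rough idea of what such a conversion involves, here is a minimal sketch of standard G.711 A-law expansion. The class `AlawSketch` and its method names are hypothetical and are not the project's `G711ACodec`; 16-bit little-endian output is also an assumption.

```java
/** Hypothetical helper (not the project's G711ACodec): G.711 A-law to 16-bit PCM. */
final class AlawSketch {

    /** Expands one A-law byte into a signed 16-bit linear sample. */
    static short decodeSample(byte alaw) {
        int v = (alaw & 0xFF) ^ 0x55;          // undo the alternate-bit inversion
        int mantissa = (v & 0x0F) << 4;        // 4-bit mantissa
        int segment = (v & 0x70) >> 4;         // 3-bit segment (exponent)
        int magnitude = (segment == 0)
                ? mantissa + 8
                : (mantissa + 0x108) << (segment - 1);
        // In A-law, a set sign bit means a positive sample
        return (short) ((v & 0x80) != 0 ? magnitude : -magnitude);
    }

    /** Decodes a whole buffer; output is assumed to be little-endian 16-bit PCM. */
    static byte[] toPcm16le(byte[] alaw) {
        byte[] pcm = new byte[alaw.length * 2];
        for (int i = 0; i < alaw.length; i++) {
            short s = decodeSample(alaw[i]);
            pcm[2 * i] = (byte) s;             // low byte
            pcm[2 * i + 1] = (byte) (s >> 8);  // high byte
        }
        return pcm;
    }

    public static void main(String[] args) {
        byte[] pcm = toPcm16le(new byte[] {(byte) 0xD5, (byte) 0x55});
        System.out.println("decoded " + pcm.length + " PCM bytes");
    }
}
```

Each A-law byte becomes two PCM bytes, so an 8000 Hz mono A-law stream turns into the 8000 Hz, 1 channel, 16-bit input that the demo declares for its audio track.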

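3. Integration flow

- Initialize the Hikvision SDK and ZLM4J

- Log in to the camera through the Hikvision SDK

- Start the camera's live preview and register the stream-data callback

- Create the matching stream in ZLM4J and initialize its audio and video tracks

- In the callback, extract the raw H264/H265 bitstream and the audio data from the PS stream, and decode the audio to PCM

- Push the audio and video streams into ZLM4J

- Play the stream with VLC or flv.js and observe the latency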
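The step of pulling audio and video out of the PS stream rests on two small pieces of MPEG-PS knowledge: the `stream_id` byte after the `00 00 01` start code tells video from audio, and the optional PTS is packed into 5 bytes on a 90 kHz clock. The sketch below illustrates both in isolation. `PesSketch` and its methods are hypothetical names used for illustration only and are not part of ZLM4J or the Hikvision SDK.

```java
/** Hypothetical helper illustrating PES stream_id classification and PTS decoding. */
final class PesSketch {

    enum Kind { VIDEO, AUDIO, OTHER }

    /** Classifies a PES packet by its stream_id byte. */
    static Kind classify(int streamId) {
        if (streamId >= 0xE0 && streamId <= 0xEF) {
            return Kind.VIDEO;
        }
        if (streamId >= 0xC0 && streamId <= 0xDF) {
            return Kind.AUDIO;
        }
        return Kind.OTHER;
    }

    /** Decodes the 33-bit PTS (90 kHz ticks) from its 5-byte PES encoding. */
    static long decodePts(byte[] b, int off) {
        // Mask every byte to avoid sign extension, and work in long to keep bit 32
        long b0 = b[off] & 0xFF;
        long b1 = b[off + 1] & 0xFF;
        long b2 = b[off + 2] & 0xFF;
        long b3 = b[off + 3] & 0xFF;
        long b4 = b[off + 4] & 0xFF;
        return ((b0 & 0x0E) << 29)   // PTS[32..30]
                | (b1 << 22)         // PTS[29..22]
                | ((b2 & 0xFE) << 14) // PTS[21..15]
                | (b3 << 7)          // PTS[14..7]
                | ((b4 & 0xFE) >> 1); // PTS[6..0]
    }

    /** Converts 90 kHz ticks to milliseconds. */
    static long toMillis(long pts90k) {
        return pts90k / 90;
    }

    public static void main(String[] args) {
        byte[] oneSecond = {0x21, 0x00, 0x05, (byte) 0xBF, 0x21};
        System.out.println(classify(0xE0) + " pts(ms)=" + toMillis(decodePts(oneSecond, 0)));
    }
}
```

Masking each byte with `0xFF` matters in Java because `byte` is signed; without it, any byte at or above `0x80` would sign-extend and corrupt the timestamp.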

4. Code

Code for steps 1-4:

```java
import com.sun.jna.Native;
import com.sun.jna.Pointer;
// ZLMApi, MK_INI and MK_MEDIA come from zlm4j; HCNetSDK is the JNA binding for the
// Hikvision SDK. Import them from wherever they live in your project.

public class RealPlayDemo {

    public static ZLMApi ZLM_API = Native.load("mk_api", ZLMApi.class);
    public static HCNetSDK hCNetSDK = Native.load("HCNetSDK", HCNetSDK.class);
    // -1 means "not logged in"
    static int lUserID = -1;

    public static void main(String[] args) throws InterruptedException {
        // Initialize the ZLM server
        ZLM_API.mk_env_init2(1, 1, 1, null, 0, 0, null, 0, null, null);
        // Start the HTTP server. Returns 0 on failure, otherwise the port number
        short http_server_port = ZLM_API.mk_http_server_start((short) 7788, 0);
        // Start the RTSP server. Returns 0 on failure, otherwise the port number
        short rtsp_server_port = ZLM_API.mk_rtsp_server_start((short) 7554, 0);
        // Start the RTMP server. Returns 0 on failure, otherwise the port number
        short rtmp_server_port = ZLM_API.mk_rtmp_server_start((short) 7935, 0);
        // Initialize the Hikvision SDK
        boolean initSuc = hCNetSDK.NET_DVR_Init();
        if (!initSuc) {
            System.out.println("Hikvision SDK initialization failed");
            return;
        }
        // Log in to the Hikvision device
        Login_V40("192.168.1.64", (short) 8000, "admin", "hk123456");
        if (lUserID < 0) {
            // Login failed: release the SDK instead of starting a preview
            hCNetSDK.NET_DVR_Cleanup();
            return;
        }
        MK_INI mkIni = ZLM_API.mk_ini_create();
        ZLM_API.mk_ini_set_option(mkIni, "enable_rtsp", "1");
        ZLM_API.mk_ini_set_option(mkIni, "enable_rtmp", "1");
        ZLM_API.mk_ini_set_option_int(mkIni, "auto_close", 1);
        // Create the media source
        MK_MEDIA mkMedia = ZLM_API.mk_media_create2(
            "defaultVhost", "live", "test", 0, mkIni
        );
        ZLM_API.mk_ini_release(mkIni);
        // Resolution, frame rate and bit rate can be arbitrary here. Codec 0 is H264, 1 is H265.
        // The codec can be fixed up front, or detected in the callback before initializing the track.
        ZLM_API.mk_media_init_video(mkMedia, 0, 1, 1, 25.0f, 2500);
        ZLM_API.mk_media_init_audio(mkMedia, 2, 8000, 1, 16);
        ZLM_API.mk_media_init_complete(mkMedia);
        FRealDataCallback fRealDataCallBack = new FRealDataCallback(mkMedia, 25.0);
        HCNetSDK.NET_DVR_PREVIEWINFO netDvrPreviewinfo = new HCNetSDK.NET_DVR_PREVIEWINFO();
        netDvrPreviewinfo.lChannel = 1;
        netDvrPreviewinfo.dwStreamType = 0;
        netDvrPreviewinfo.bBlocked = 0;
        netDvrPreviewinfo.dwLinkMode = 0;
        netDvrPreviewinfo.byProtoType = 0;
        // Start the live preview
        long ret = hCNetSDK.NET_DVR_RealPlay_V40(
            lUserID, netDvrPreviewinfo, fRealDataCallBack, Pointer.NULL
        );
        if (ret == -1) {
            System.out.println(
                "[Hikvision SDK] Failed to start live preview! Error code: "
                    + hCNetSDK.NET_DVR_GetLastError()
            );
            return;
        }
        ZLM_API.mk_media_set_on_close(
            mkMedia,
            pointer -> {
                fRealDataCallBack.release();
                hCNetSDK.NET_DVR_StopRealPlay(ret);
                System.out.println("Stream closed, resources released automatically");
            },
            Pointer.NULL
        );
        // Keep the process alive for two minutes
        Thread.sleep(120000);
        // fRealDataCallBack.release();
        // hCNetSDK.NET_DVR_StopRealPlay(ret);
        Logout();
    }

    /**
     * Log in to the device.
     *
     * @param m_sDeviceIP device IP address
     * @param wPort       port number; the default login port of the device network SDK is 8000
     * @param m_sUsername user name
     * @param m_sPassword password
     */
    public static void Login_V40(
        String m_sDeviceIP, short wPort, String m_sUsername, String m_sPassword
    ) {
        // Device login information
        HCNetSDK.NET_DVR_USER_LOGIN_INFO m_strLoginInfo = new HCNetSDK.NET_DVR_USER_LOGIN_INFO();
        // Device information
        HCNetSDK.NET_DVR_DEVICEINFO_V40 m_strDeviceInfo = new HCNetSDK.NET_DVR_DEVICEINFO_V40();

        // Copy by byte length, not String length, so non-ASCII values are not truncated
        byte[] ip = m_sDeviceIP.getBytes();
        m_strLoginInfo.sDeviceAddress = new byte[HCNetSDK.NET_DVR_DEV_ADDRESS_MAX_LEN];
        System.arraycopy(ip, 0, m_strLoginInfo.sDeviceAddress, 0, ip.length);

        m_strLoginInfo.wPort = wPort;

        byte[] user = m_sUsername.getBytes();
        m_strLoginInfo.sUserName = new byte[HCNetSDK.NET_DVR_LOGIN_USERNAME_MAX_LEN];
        System.arraycopy(user, 0, m_strLoginInfo.sUserName, 0, user.length);

        byte[] password = m_sPassword.getBytes();
        m_strLoginInfo.sPassword = new byte[HCNetSDK.NET_DVR_LOGIN_PASSWD_MAX_LEN];
        System.arraycopy(password, 0, m_strLoginInfo.sPassword, 0, password.length);

        // Asynchronous login: false = no, true = yes
        m_strLoginInfo.bUseAsynLogin = false;
        // The data reaches native memory only after write() is called
        m_strLoginInfo.write();
        lUserID = hCNetSDK.NET_DVR_Login_V40(m_strLoginInfo, m_strDeviceInfo);
        if (lUserID == -1) {
            System.out.println("Login failed, error code: " + hCNetSDK.NET_DVR_GetLastError());
        } else {
            System.out.println("Login succeeded!");
            // The structure holds the returned data only after read()
            m_strDeviceInfo.read();
        }
    }

    // Log out of the device and release the SDK
    public static void Logout() {
        if (lUserID >= 0) {
            if (!hCNetSDK.NET_DVR_Logout(lUserID)) {
                System.out.println("Logout failed, error code: " + hCNetSDK.NET_DVR_GetLastError());
            } else {
                System.out.println("Logout succeeded");
            }
        } else {
            System.out.println("Device is not logged in");
        }
        hCNetSDK.NET_DVR_Cleanup();
    }
}
```

Code for steps 5-6:

```java
import com.sun.jna.CallbackThreadInitializer;
import com.sun.jna.Memory;
import com.sun.jna.Native;
import com.sun.jna.Pointer;
import com.sun.jna.ptr.ByteByReference;
import java.io.IOException;
// MK_MEDIA comes from zlm4j; HCNetSDK and G711ACodec come from the demo project.
// ZLM_API is the static field declared in RealPlayDemo (for example via a static import).

public class FRealDataCallback implements HCNetSDK.FRealDataCallBack_V30 {

    private final MK_MEDIA mkMedia;
    private final Memory buffer = new Memory(1024 * 1024 * 5);
    private int bufferSize = 0;
    private long pts;
    private double fps;
    private long time_base;
    private int videoType = 0;
    private int audioType = 0;

    public FRealDataCallback(MK_MEDIA mkMedia, double fps) {
        this.mkMedia = mkMedia;
        this.fps = fps;
        // ZLM uses a time base of 1000 (milliseconds)
        time_base = (long) (1000 / fps);
        // Run every callback on the same thread
        Native.setCallbackThreadInitializer(
            this, new CallbackThreadInitializer(true, false, "HikRealStream")
        );
    }

    @Override
    public void invoke(
        long lRealHandle, int dwDataType, ByteByReference pBuffer, int dwBufSize, Pointer pUser
    ) throws IOException {
        // PS-encapsulated stream data
        if (dwDataType == HCNetSDK.NET_DVR_STREAMDATA) {
            Pointer pointer = pBuffer.getPointer();
            int offset = 0;
            // Parse the PS header, the PS system header and the PSM
            offset = readPSHAndPSMAndPSMT(pointer, offset);
            // Read the PES data
            readPES(pointer, offset);
        }
    }

    /**
     * Reads a PES packet and its payload.
     */
    private void readPES(Pointer pointer, int offset) {
        // PES start code
        byte[] pesHeaderStartCode = new byte[3];
        pointer.read(offset, pesHeaderStartCode, 0, pesHeaderStartCode.length);
        if ((pesHeaderStartCode[0] & 0xFF) == 0x00
            && (pesHeaderStartCode[1] & 0xFF) == 0x00
            && (pesHeaderStartCode[2] & 0xFF) == 0x01) {
            offset = offset + pesHeaderStartCode.length;
            byte[] streamTypeByte = new byte[1];
            pointer.read(offset, streamTypeByte, 0, streamTypeByte.length);
            offset = offset + streamTypeByte.length;
            int streamType = streamTypeByte[0] & 0xFF;
            if (streamType >= 0xE0 && streamType <= 0xEF) {
                // Video data
                readVideoES(pointer, offset);
            } else if (streamType >= 0xC0 && streamType <= 0xDF) {
                // Audio data
                readAudioES(pointer, offset);
            }
        }
    }

    /**
     * Reads video data.
     */
    private void readVideoES(Pointer pointer, int offset) {
        byte[] pesLengthByte = new byte[2];
        pointer.read(offset, pesLengthByte, 0, pesLengthByte.length);
        offset = offset + pesLengthByte.length;
        int pesLength = ((pesLengthByte[0] & 0xFF) << 8) | (pesLengthByte[1] & 0xFF);
        // PES payload
        if (pesLength > 0) {
            byte[] pts_dts_length_info = new byte[3];
            pointer.read(offset, pts_dts_length_info, 0, pts_dts_length_info.length);
            offset = offset + pts_dts_length_info.length;
            int pesHeaderLength = (pts_dts_length_info[2] & 0xFF);
            // Check whether a PTS is present; the DTS is ignored
            int i = (pts_dts_length_info[1] & 0xFF) >> 6;
            if (i == 0x02 || i == 0x03) {
                //byte[] pts_dts = new byte[5];
                //pointer.read(offset, pts_dts, 0, pts_dts.length);
                // The PTS in the stream uses a 90000 time base and would need converting to 1/1000.
                // Even then it is not smooth enough and the picture stutters, so it is not used.
                //long pts_90000 = ((pts_dts[0] & 0x0e) << 29) | (((pts_dts[1] << 8 | pts_dts[2]) & 0xfffe) << 14) | (((pts_dts[3] << 8 | pts_dts[4]) & 0xfffe) >> 1);
                pts = time_base + pts;
            }
            offset = offset + pesHeaderLength;
            byte[] naluStart = new byte[5];
            pointer.read(offset, naluStart, 0, naluStart.length);
            // NALU start code
            if ((naluStart[0] & 0xFF) == 0x00
                && (naluStart[1] & 0xFF) == 0x00
                && (naluStart[2] & 0xFF) == 0x00
                && (naluStart[3] & 0xFF) == 0x01) {
                if (bufferSize != 0) {
                    // NALU type
                    int naluType = (naluStart[4] & 0x1F);
                    // SPS and PPS must not advance the PTS
                    if (naluType == 7 || naluType == 8) {
                        pts = pts - time_base;
                    }
                    if (videoType == 0x1B) {
                        // Push the buffered H264 frame
                        ZLM_API.mk_media_input_h264(mkMedia, buffer.share(0), bufferSize, pts, pts);
                    } else if (videoType == 0x24) {
                        // Push the buffered H265 frame
                        ZLM_API.mk_media_input_h265(mkMedia, buffer.share(0), bufferSize, pts, pts);
                    }
                    bufferSize = 0;
                }
            }
            int naluLength = pesLength - pts_dts_length_info.length - pesHeaderLength;
            byte[] temp = new byte[naluLength];
            pointer.read(offset, temp, 0, naluLength);
            buffer.write(bufferSize, temp, 0, naluLength);
            bufferSize = naluLength + bufferSize;
        }
    }

    /**
     * Reads audio data.
     */
    private void readAudioES(Pointer pointer, int offset) {
        byte[] pesLengthByte = new byte[2];
        pointer.read(offset, pesLengthByte, 0, pesLengthByte.length);
        offset = offset + pesLengthByte.length;
        int pesLength = ((pesLengthByte[0] & 0xFF) << 8) | (pesLengthByte[1] & 0xFF);
        // PES payload
        if (pesLength > 0) {
            byte[] pts_dts_length_info = new byte[3];
            pointer.read(offset, pts_dts_length_info, 0, pts_dts_length_info.length);
            offset = offset + pts_dts_length_info.length;
            int pesHeaderLength = (pts_dts_length_info[2] & 0xFF);
            // Check whether a PTS is present; the DTS is ignored
            int i = (pts_dts_length_info[1] & 0xFF) >> 6;
            long pts_90000 = 0;
            if (i == 0x02 || i == 0x03) {
                byte[] pts_dts = new byte[5];
                pointer.read(offset, pts_dts, 0, pts_dts.length);
                // Bytes are masked with 0xFF and widened to long so that sign extension
                // and int overflow cannot corrupt the 33-bit value.
                pts_90000 = ((long) (pts_dts[0] & 0x0E) << 29)
                    | ((long) ((((pts_dts[1] & 0xFF) << 8) | (pts_dts[2] & 0xFF)) & 0xFFFE) << 14)
                    | (((((pts_dts[3] & 0xFF) << 8) | (pts_dts[4] & 0xFF)) & 0xFFFE) >> 1);
                // This PTS is on a 90000 time base, whereas ZLM works in 1/1000 (see time_base).
                // It is passed through unconverted here; dividing by 90 may be needed.
                //pts = time_base + pts;
            }
            offset = offset + pesHeaderLength;
            int audioLength = pesLength - pts_dts_length_info.length - pesHeaderLength;
            byte[] bytes = G711ACodec._toPCM(pointer.getByteArray(offset, audioLength));
            Memory temp = new Memory(bytes.length);
            temp.write(0, bytes, 0, bytes.length);
            ZLM_API.mk_media_input_pcm(mkMedia, temp.share(0), bytes.length, pts_90000);
            temp.close();
        }
    }

    /**
     * Reads the PS header, the PS system header and the PSM.
     *
     * @return the offset of the first byte after these headers
     */
    private int readPSHAndPSMAndPSMT(Pointer pointer, int offset) {
        // PS header start code
        byte[] psHeaderStartCode = new byte[4];
        pointer.read(offset, psHeaderStartCode, 0, psHeaderStartCode.length);
        // Is this a PS header?
        if ((psHeaderStartCode[0] & 0xFF) == 0x00
            && (psHeaderStartCode[1] & 0xFF) == 0x00
            && (psHeaderStartCode[2] & 0xFF) == 0x01
            && (psHeaderStartCode[3] & 0xFF) == 0xBA) {
            byte[] stuffingLengthByte = new byte[1];
            offset = 13;
            pointer.read(offset, stuffingLengthByte, 0, stuffingLengthByte.length);
            int stuffingLength = stuffingLengthByte[0] & 0x07;
            offset = offset + stuffingLength + 1;
            // Start code of the next header
            byte[] psSystemHeaderStartCode = new byte[4];
            pointer.read(offset, psSystemHeaderStartCode, 0, psSystemHeaderStartCode.length);
            // PS system header
            if ((psSystemHeaderStartCode[0] & 0xFF) == 0x00
                && (psSystemHeaderStartCode[1] & 0xFF) == 0x00
                && (psSystemHeaderStartCode[2] & 0xFF) == 0x01
                && (psSystemHeaderStartCode[3] & 0xFF) == 0xBB) {
                offset = offset + psSystemHeaderStartCode.length;
                // header_length is a 16-bit field (ISO/IEC 13818-1); the original read
                // a single byte and did not skip the length field itself
                byte[] psSystemLengthByte = new byte[2];
                pointer.read(offset, psSystemLengthByte, 0, psSystemLengthByte.length);
                int psSystemLength =
                    ((psSystemLengthByte[0] & 0xFF) << 8) | (psSystemLengthByte[1] & 0xFF);
                // Skip the PS system header
                offset = offset + psSystemLengthByte.length + psSystemLength;
                pointer.read(offset, psSystemHeaderStartCode, 0, psSystemHeaderStartCode.length);
            }
            // A PSM header here means this is an IDR frame
            if ((psSystemHeaderStartCode[0] & 0xFF) == 0x00
                && (psSystemHeaderStartCode[1] & 0xFF) == 0x00
                && (psSystemHeaderStartCode[2] & 0xFF) == 0x01
                && (psSystemHeaderStartCode[3] & 0xFF) == 0xBC) {
                offset = offset + psSystemHeaderStartCode.length;
                // PSM length
                byte[] psmLengthByte = new byte[2];
                pointer.read(offset, psmLengthByte, 0, psmLengthByte.length);
                int psmLength = ((psmLengthByte[0] & 0xFF) << 8) | (psmLengthByte[1] & 0xFF);
                // Detect the audio and video stream types once
                if (videoType == 0 || audioType == 0) {
                    // Program stream info descriptors
                    byte[] detailStreamLengthByte = new byte[2];
                    int tempOffset = offset + psmLengthByte.length + 2;
                    pointer.read(tempOffset, detailStreamLengthByte, 0, detailStreamLengthByte.length);
                    int detailStreamLength =
                        ((detailStreamLengthByte[0] & 0xFF) << 8) | (detailStreamLengthByte[1] & 0xFF);
                    tempOffset = detailStreamLength + detailStreamLengthByte.length + tempOffset + 2;
                    byte[] videoStreamTypeByte = new byte[1];
                    pointer.read(tempOffset, videoStreamTypeByte, 0, videoStreamTypeByte.length);
                    videoType = videoStreamTypeByte[0] & 0xFF;
                    tempOffset = tempOffset + videoStreamTypeByte.length + 1;
                    byte[] videoStreamDetailLengthByte = new byte[2];
                    pointer.read(
                        tempOffset, videoStreamDetailLengthByte, 0, videoStreamDetailLengthByte.length
                    );
                    int videoStreamDetailLength =
                        ((videoStreamDetailLengthByte[0] & 0xFF) << 8)
                            | (videoStreamDetailLengthByte[1] & 0xFF);
                    tempOffset = tempOffset + videoStreamDetailLengthByte.length + videoStreamDetailLength;
                    byte[] audioStreamTypeByte = new byte[1];
                    pointer.read(tempOffset, audioStreamTypeByte, 0, audioStreamTypeByte.length);
                    audioType = audioStreamTypeByte[0] & 0xFF;
                }
                offset = offset + psmLengthByte.length + psmLength;
            }
        }
        return offset;
    }

    /**
     * Releases resources.
     */
    public void release() {
        ZLM_API.mk_media_release(mkMedia);
        buffer.close();
    }
}
```

5. Preview and latency comparison

1. Once the log shows that the media stream has been registered (the `媒体注册` entries), it can be opened in a player.

```
2024-05-30 14:38:48.514 I [java.exe] [13388-event poller 0] MediaSource.cpp:517 emitEvent | 媒体注册:fmp4://defaultVhost/live/test
2024-05-30 14:38:48.514 I [java.exe] [13388-event poller 0] MultiMediaSourceMuxer.cpp:561 onAllTrackReady | stream: schema://defaultVhost/app/stream , codec info: H264[2688/1520/25] mpeg4-generic[8000/1/16]
2024-05-30 14:38:48.514 I [java.exe] [13388-event poller 0] MediaSource.cpp:517 emitEvent | 媒体注册:rtsp://defaultVhost/live/test
2024-05-30 14:38:48.514 I [java.exe] [13388-event poller 0] MediaSource.cpp:517 emitEvent | 媒体注册:rtmp://defaultVhost/live/test
2024-05-30 14:38:48.515 I [java.exe] [13388-event poller 0] MediaSource.cpp:517 emitEvent | 媒体注册:ts://defaultVhost/live/test
2024-05-30 14:38:52.080 I [java.exe] [13388-event poller 0] MediaSource.cpp:517 emitEvent | 媒体注册:hls://defaultVhost/live/test
```

2. WS-FLV playback compared with playing the camera's RTSP stream directly

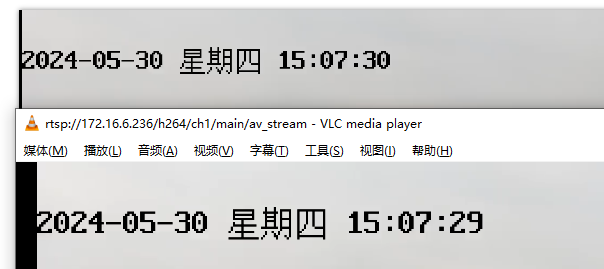

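3. WS-FLV playback compared with the preview in the camera's management interface

4. The picture is roughly 1-2 s ahead of the camera's own RTSP stream, and essentially in step with the camera's management interface.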
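To reproduce the comparison, the registered stream (`live/test`) has to be opened through one of the servers the demo starts (HTTP on 7788, RTSP on 7554, RTMP on 7935). The sketch below builds candidate playback URLs. The `.live.flv` suffix follows the URL convention commonly used by ZLMediaKit and is an assumption here, as are the `PlayUrlSketch` class name and the `127.0.0.1` host; check the project documentation for the exact paths.

```java
/** Hypothetical helper that builds playback URLs for a stream registered as app/stream. */
final class PlayUrlSketch {

    static String rtsp(String host, int rtspPort, String app, String stream) {
        return "rtsp://" + host + ":" + rtspPort + "/" + app + "/" + stream;
    }

    static String rtmp(String host, int rtmpPort, String app, String stream) {
        return "rtmp://" + host + ":" + rtmpPort + "/" + app + "/" + stream;
    }

    /** WS-FLV is served by the HTTP server; the ".live.flv" suffix is an assumption. */
    static String wsFlv(String host, int httpPort, String app, String stream) {
        return "ws://" + host + ":" + httpPort + "/" + app + "/" + stream + ".live.flv";
    }

    public static void main(String[] args) {
        // Ports match the ones passed to mk_*_server_start in the demo
        System.out.println(rtsp("127.0.0.1", 7554, "live", "test"));
        System.out.println(rtmp("127.0.0.1", 7935, "live", "test"));
        System.out.println(wsFlv("127.0.0.1", 7788, "live", "test"));
    }
}
```

The RTSP or RTMP URL can be pasted into VLC, while the WS-FLV URL is the kind of address a flv.js player in a browser would be pointed at.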

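6. Summary

This hands-on example, connecting the Hikvision SDK to ZLM4J for ultra-low-latency live preview, walks through the ZLM4J integration flow and its basic usage, and shows what an embedded streaming media server makes possible. The complete project is available on Gitee: https://gitee.com/daofuli/zlm4j_hk. More ZLM4J use cases will follow.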
